Only avatars created by Unity model importer are supported UMotion

I have a rigged, working Humanoid model in Unity 2019. It's fully animated and works as expected, yet when I drag it onto the pose editor I get the above error message. Any clues what I might need to change? The model can be exported and imported into Unity as is, so I don't understand the error.

Answer

Hi,

thank you very much for your support request.

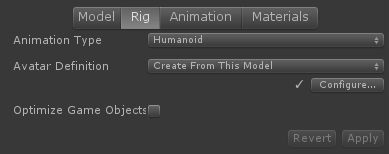

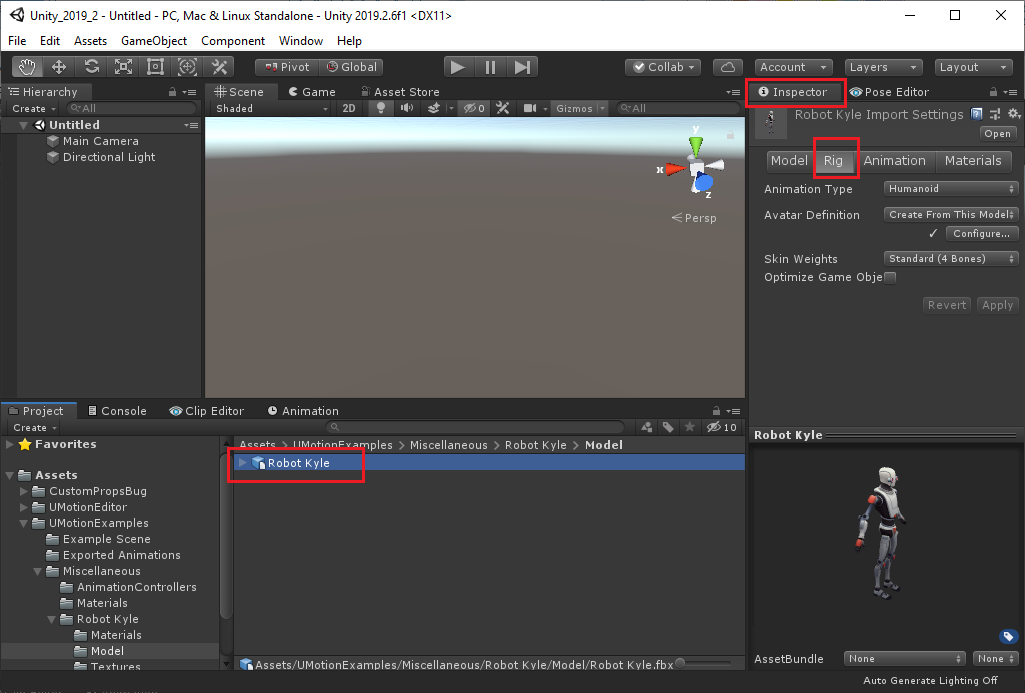

Please make sure that in the "Rig" tab of the import settings (shown in the Inspector when selecting your character's source file in the "Project Window"), you have set "Avatar Definition" to "Create from this model". This ensures that Unity generates a humanoid avatar from your character.

UMotion requires a humanoid avatar that has been generated by Unity's model importer because it contains more information (needed to guarantee correct humanoid *.anim export) than a humanoid avatar generated via script.
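For anyone who needs to check many characters at once, the two "Rig" settings mentioned above can also be read from the model's *.meta file. The following is a minimal Python sketch and not part of UMotion or Unity. The field names `animationType` and `avatarSetup` and their numeric values (3 for "Humanoid", 1 for "Create from this model") are assumptions based on how Unity typically serializes model import settings, so please verify them against one of your own *.meta files first. The sample text is a hypothetical excerpt.

```python
import re

# Assumed numeric values; verify against a .meta file from your own project.
ANIMATION_TYPE_HUMANOID = 3         # assumed: "Animation Type" = Humanoid
AVATAR_SETUP_CREATE_FROM_MODEL = 1  # assumed: "Avatar Definition" = Create from this model


def read_int_field(meta_text, field):
    """Return the integer value of the first `field: <int>` line, or None if absent."""
    pattern = rf"^\s*{re.escape(field)}:\s*(-?\d+)\s*$"
    match = re.search(pattern, meta_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def check_rig_settings(meta_text):
    """Return a list of human-readable problems found in a model's .meta text."""
    problems = []
    if read_int_field(meta_text, "animationType") != ANIMATION_TYPE_HUMANOID:
        problems.append('Animation Type is not "Humanoid"')
    if read_int_field(meta_text, "avatarSetup") != AVATAR_SETUP_CREATE_FROM_MODEL:
        problems.append('Avatar Definition is not "Create from this model"')
    return problems


# Hypothetical excerpt of a character.fbx.meta file (rig still set to Generic).
sample_meta = """\
ModelImporter:
  animationType: 2
  avatarSetup: 1
"""

print(check_rig_settings(sample_meta))
```

An empty list means both settings look as described above. To check a real file, read its text (for example with `open(path).read()`) and pass it to `check_rig_settings`.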

Please let me know if you have any follow-up questions.

Best regards,

Peter

Hi,

the "Rig" tab is shown in the Inspector Window when you have your character's source file selected in Unity's Project Window:

Best regards,

Peter

You are selecting the prefab but not the original model. Search for the model's source file (it usually has an *.fbx file ending). If your character doesn't have a source file (because it is generated inside Unity by some character generator scripts), you need to make an *.FBX file out of it. You can do this by using Unity's FBX Exporter (you can install the FBX Exporter via Unity's Package Manager; don't use the version from the Asset Store because it is outdated). Once you've exported your character to *.FBX, drag the *.FBX into your scene and use that character in UMotion (instead of the prefab).

Let me know if you need further assistance.

Best regards,

Peter

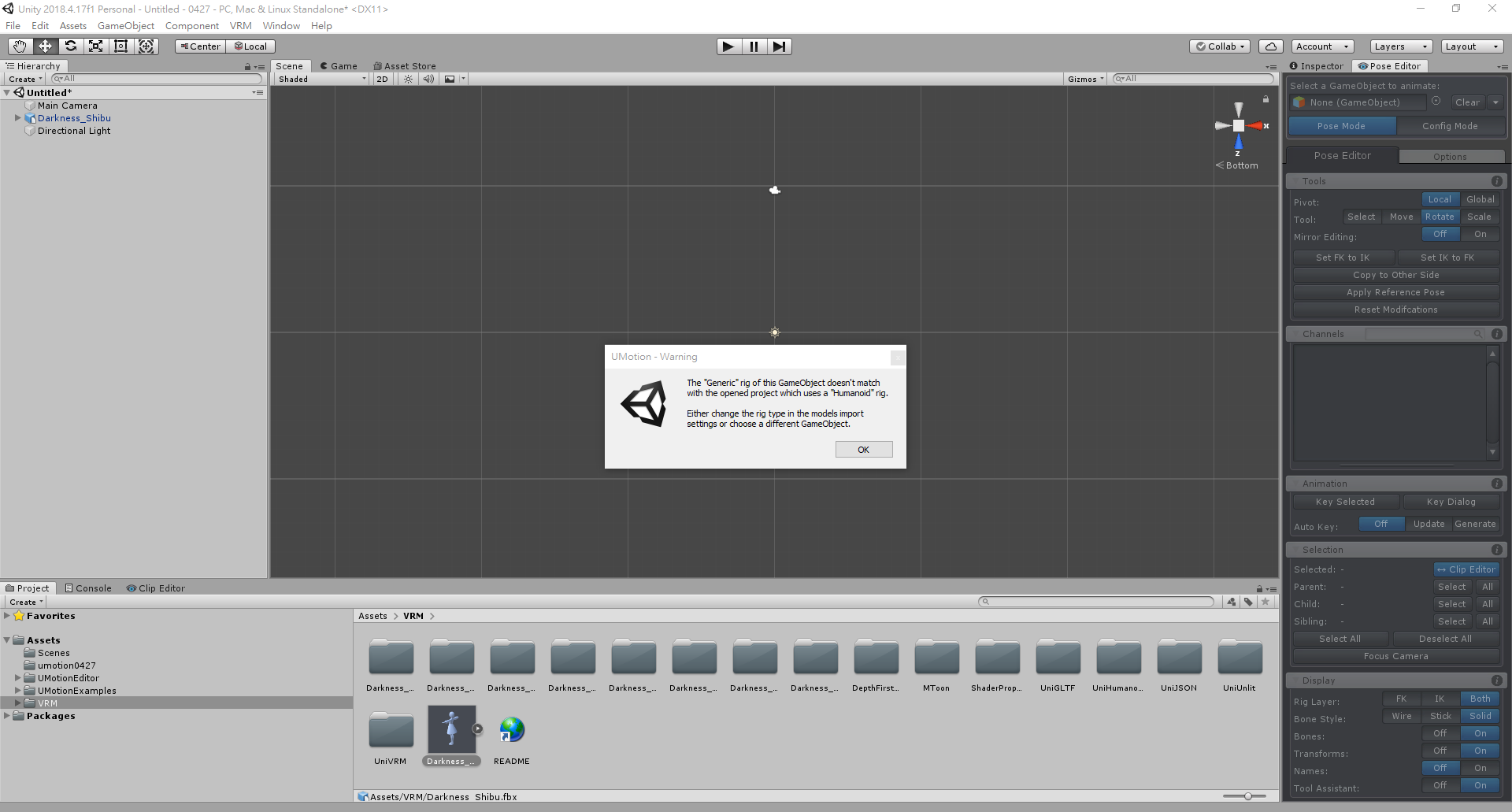

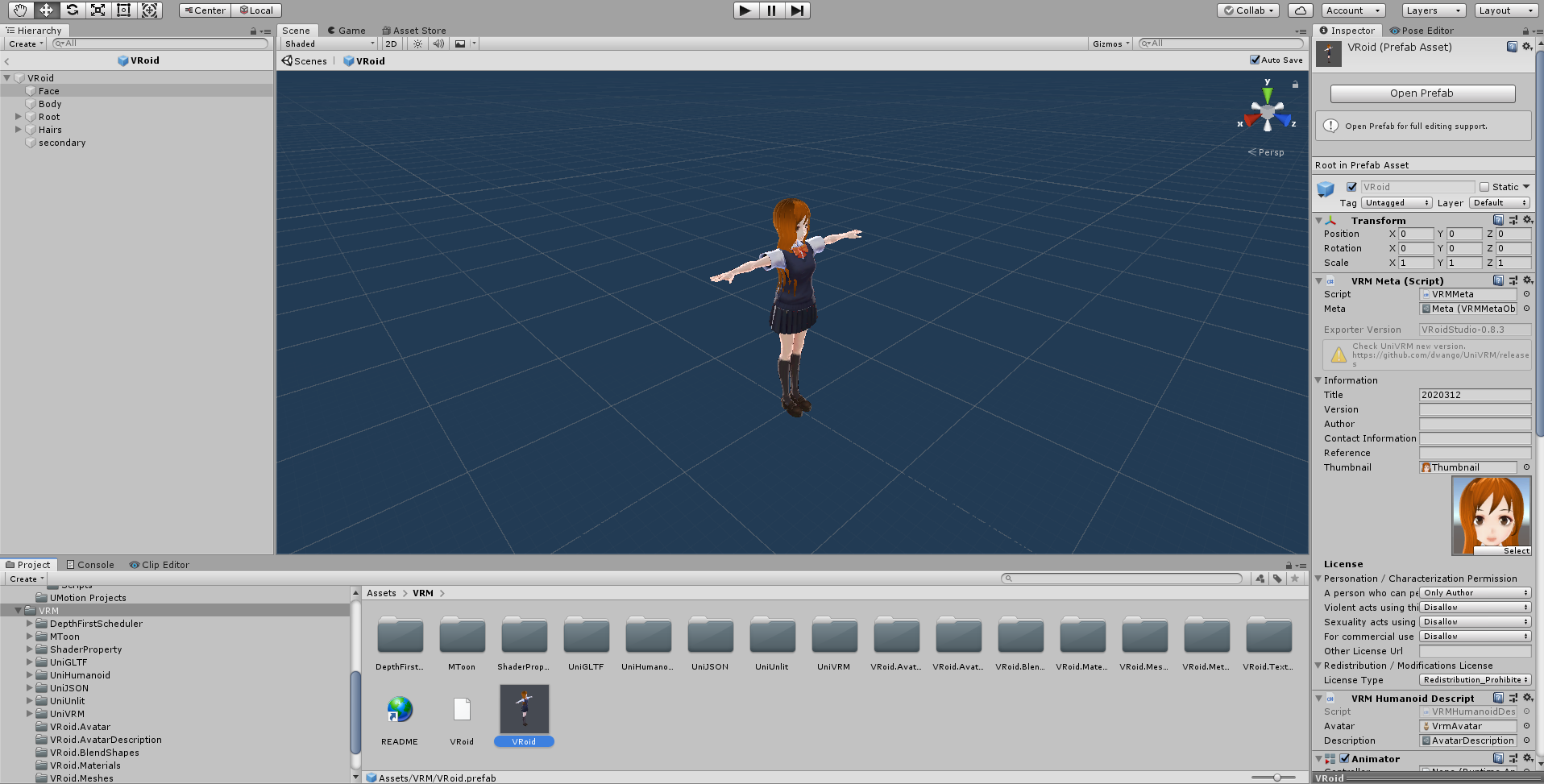

I have converted the "*.vrm" to "*.fbx", but it still doesn't work. Can I ask what is wrong?

You need to configure your character to "humanoid". Open the Inspector (while having your character selected in Unity's Project Window) and look at the "Rig" tab, change "generic" to "humanoid".

You can find all video tutorials in the manual's dedicated "video tutorial" section or on our Youtube channel: https://www.youtube.com/watch?v=beH_hB4YwaY&list=PLI-5k9R34MAzGs-FomlWDZQXF93w6qyfD

I am adding to this thread as I am also attempting to use UMotion with VRoid Studio characters.

Warning: I am relatively new to Unity, but learning fast!

I am trying to work out the best workflow using Unity and VRoid characters (and Unity models, terrains, etc) to create simple animated videos. So the goal is to make the characters act and generate a MP4 file, not build a game.

I started using the Unity built in timeline editing support (with the new 2020 Rigging Animation constraints), but it is excruciatingly painful. The screen only reflects changes I make in the animation clip if I am lucky half the time. This makes animation incredibly painful and slow - which is why I purchased your Pro extension. Anything that could speed up the process would save me a lot of time and hair pulling.

Background:

VRoid Studio is free software allowing you to create Anime style characters relatively easily. It exports characters in VRM format, which can be loaded into VR Chat and other applications. There is a UniVRM package for Unity that can import VRM creating the prefab (as above). It uses the "VRM/MToon" shader for a more "cartoon" feel, but which also supports transparency in the texture. I also extended the shader to blend between textures, so I can make the face blush (or sweat, or other expressions enhanced by texture changes). I also added lots more blendshapes to the face. I also add extra components to objects, MagicaCloth for better hair blowing in the wind etc. I do the same flow to every character.

I hit exactly the same problem as above, where you recommended to export to FBX and reimport that file back in.

I did an FBX export and loaded the FBX file back in, but all the materials had converted to the "Standard" shader. This lost the transparency support. It also lost the VRM/MToon shader so the texture blending support is lost. So I "extract materials from FBX" so I can modify them, then changed all the shaders back to VRM/MToon, changed Opaque to Transparent, and it seems to be coming back to where I started from... and I can drag the character now into the Pose panel! Success!

It also found all the blendshapes, and included them - so that is good.

There are a few things I have not worked out how to do yet (but have not finished reading the manual yet).

* Have a script on the character that I want to animate properties for as well (the float lerp between normal face texture and blush texture - I have a component on the root character with a property of "blush lerp" where 0 = normal and 1 = full blush). I have not worked out yet whether your tool can handle animation of float script properties.

* Do you support avatar masks? I was planning on having override tracks for the facial expression, left hand, and right hand. So I can have the main body walk etc, then start layering on top other animation clips (e.g. for hand positions - point, make a fist, etc).

I was curious to see if you thought I was heading in a sensible direction or not. It seems to be a bit of extra work, but doable.

Hi,

thank you very much for your detailed write-up.

Doing an FBX export is the correct approach. You can assign any materials to the FBX character's instance (even the original ones that have been created by the VRM importer plugin). There is no need to extract and manipulate the ones that are inside the FBX.

* Have a script on the character that I want to animate properties for as well (the float lerp between normal face texture and blush texture - I have a component on the root character with a property of "blush lerp" where 0 = normal and 1 = full blush). I have not worked out yet whether your tool can handle animation of float script properties.

A "custom property" constraint in "component property mode" can be used to animate any properties (of custom scripts or built in components). That's what UMotion automatically creates for your blend-shapes too (you could also do this manually). More information can be found in the manual at:

* Do you support avatar masks? I was planning on having override tracks for the facial expression, left hand, and right hand. So I can have the main body walk etc, then start layering on top other animation clips (e.g. for hand positions - point, make a fist, etc).

UMotion creates regular animations (as if they were created natively in Unity or in any external 3D modeling application), so they are fully compatible with all of Unity's animation features. You can simply create 2 separate animations: the "base" animation and the "override" animation. The mixing is then done by the Animator/Timeline (and the assigned avatar mask) when you press play in Unity (or preview in Timeline). You can also mix 2 animations together into one in UMotion by creating an override layer inside UMotion (but this is going to export as one animation!).

I was curious to see if you thought I was heading in a sensible direction or not. It seems to be a bit of extra work, but doable.

Yes, this is the correct way of doing it. Besides the FBX export (which is usually two clicks), it shouldn't involve any extra work.

Please let me know in case you have any follow-up questions.

Best regards,

Peter

Thanks. I am trying to automate the workflow as much as possible. I have to do this to like 30 characters, and redo it each time I change the original artwork. So I am trying to automate as much as possible. I could not update the material in the FBX imported model without extracting them first - but maybe I can just reassign to the original ones....

Ah! I missed custom properties. I will give that a go, thanks!

The Avatar masks are a bit easier than just doing the appropriate layers. I was hitting problems (mainly with 3rd party clips) as they would update lots of properties - if I missed one in my clip, it would go strange. Masks seemed to help control what layers were from what without setting every single value. (But remember I am relatively new so may be doing something stupid along the way.)

I am currently planning on possibly 7 layers of clips to overlay:

- full body movement (walk, run, sit, stand and wave, etc),

- an eye "look at" controller with lerp properties to blend so I can look at character 1, then character 2 (the eyes track the other character if they move) with manual X/Y movements as a fallback

- a head turn controller similar to the eyes (eyes can move with or without head turning)

- Facial expressions (blendshapes for eyes, eyebrows, mouth) plus textures - around 70 properties here alone!!

- Not sure what to do about talking yet

- Not sure whether to have an arms override layer - e.g. so can wave if walking, sitting, jogging etc

- Left and right hand pose clips (not sure whether to merge with arms yet)

I will give it a go and see what happens for a first character. I have some clips made, but it was too painful. Hopefully it will go faster now.

I could not update the material in the FBX imported model without extracting them first

The material inside the FBX is read-only, but you can assign any other materials to your characters (when instantiated in the scene or when you already made a prefab out of them).

Thanks. I am trying to automate the workflow as much as possible.

Unity's APIs allow you to automate quite a lot of things. As Unity's FBX Exporter comes with source code, you can even automate the process of exporting the character to FBX. The instantiation of the character (and generating new materials) can also be automated. But all of that requires some "intermediate level" C#/Unity API skills.

I am currently planning on possibly 7 layers of clips to overlay:

Sure, you can combine masks/layers as you like. As the clips generated in UMotion are regular *.anim clips, all of that should work as with any "native" animations.

I wish you all the best and let me know in case you have any follow-up questions.

Best regards,

Peter

Hi, I bought the pro version and I am also getting this error. I generated an avatar from the fbx file so I don't understand why this is happening. Any thoughts?

Please double check that you are really using the avatar from the fbx file:

- Go into Unity's project window and navigate to the character's fbx file you want to animate.

- Drag & drop that FBX file into your Unity scene.

- Now create a new UMotion project and assign the character you just dragged into the Unity scene to the UMotion pose editor (by clicking and dragging the character from the Unity hierarchy window to the UMotion pose editor's GameObject field).

Please let me know in case you have any follow-up questions.

Best regards,

Peter

Thanks for your fast response, Peter.

I tried dragging the fbx to the UMotion field and that works. Then, when I duplicate that avatar and set it on a different GO with animator and drag THAT to the UMotion field, I get the error. So it seems to be the case that duplicated avatars are going to spoil the process? I never use the raw fbx in the editor, I always unpack it and pick it apart and remove pieces from it.

However this is not really a problem if the clips I create/modify with UMotion are still useable on the GOs using the duplicated avatar. I'm going to try that now -- hopefully that works, or I just bought this for no reason.

Follow-up: It did work! So notwithstanding the import oddities, will be a very useful tool on this project. Thanks again, Peter.

OK, I checked this in the UMotion code. As long as the avatar is still "inside" an fbx file (i.e. it's shown when expanding the *.fbx in Unity's project window) you should be good to go. The problem is that by duplicating it you are extracting it and it becomes a standalone file. If you avoid that, the rest of your workflow should still work. I would also recommend keeping the avatar inside the FBX because that ensures that your avatar is updated when you alter the avatar settings in the FBX importer inspector.

Please let me know in case you have any follow-up questions.

Best regards,

Peter
